10 projects - #4

running form analysis

using a neural network to generate a 3D pose of a runner and analyze characteristics of their form

project goal

Much of running improvement comes from physiological changes: muscle development, lactate threshold, VO2 max, and so on. However, improving running economy and form can lead to gains independent of those physiological adaptations.

For this project, I set out to track a runner's pose and then use the tracked data for basic analyses of running form. The project is a bit of a stretch for a single day, but it was still fun to get a start on the idea.

methods

pose tracking

The crux of this analysis was accurate pose tracking. After working on skeletal hand tracking at Leap Motion for nearly 5 years, I know that this is no trivial problem. However, open-source, neural-network-based pose tracking solutions have become quite good in recent years.

For my desired analyses, I needed a 3D pose output, which led me to VideoPose3D from Facebook Research. It first detects 2D joint keypoints, and then a second network outputs the most probable 3D pose for those keypoints. It is one of the few networks I could find that can produce a 3D skeletal model from a monocular camera in an uncontrolled setting. An impressive feat, to be sure!

I found that the tracking performed best when the runner was close to the camera and at an oblique angle, when the subject was easy to isolate from the background, and when the footage was captured at a high frame rate.

running form analysis

I hoped to quantify cadence, foot position relative to the body at ground strike, spine angle, and vertical head movement. Unfortunately, a day was not enough time to work on all of these outputs. To see the analysis in progress and run it yourself, open this Google Colab notebook.

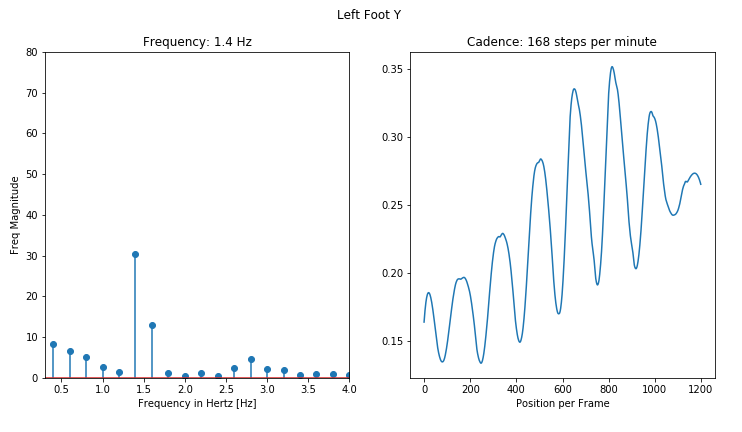
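I didn't get to the other metrics, but as a rough sketch of where two of them could start: once the 3D joints are in a NumPy array, spine angle and vertical head movement are only a few lines each. This assumes a `(frames, 17, 3)` array in the Human3.6M 17-joint order with z as the vertical axis; both the joint indices and the up axis are assumptions to check against your own output, and `spine_angle_deg` / `vertical_head_range` are hypothetical helper names, not part of VideoPose3D.

```python
import numpy as np

# ASSUMPTION: joint indices follow the 17-joint Human3.6M order
# (0 = pelvis, 8 = thorax, 10 = head); verify against your own output.
PELVIS, THORAX, HEAD = 0, 8, 10
# ASSUMPTION: axis 2 (z) is vertical in the world coordinates you use.
UP_AXIS = 2


def spine_angle_deg(poses: np.ndarray) -> np.ndarray:
    """Per-frame angle (degrees) between the pelvis-to-thorax vector and vertical.

    poses: array of shape (frames, joints, 3).
    """
    spine = poses[:, THORAX] - poses[:, PELVIS]  # (frames, 3)
    up = np.zeros(3)
    up[UP_AXIS] = 1.0
    cos_angle = (spine @ up) / np.linalg.norm(spine, axis=1)
    # Clip to [-1, 1] so rounding error can't push arccos out of its domain.
    return np.degrees(np.arccos(np.clip(cos_angle, -1.0, 1.0)))


def vertical_head_range(poses: np.ndarray) -> float:
    """Peak-to-peak vertical travel of the head keypoint over the whole clip."""
    head_height = poses[:, HEAD, UP_AXIS]
    return float(head_height.max() - head_height.min())
```

The angle is unsigned, so this version doesn't distinguish a forward lean from a backward one; projecting the spine vector onto the runner's forward axis would be one way to add that.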

The most successful metric I computed was cadence. I tracked the x or y position of a foot over time and found the dominant frequency component in a Fourier transform of that path.
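A minimal NumPy sketch of that frequency step, assuming the foot coordinate is already a 1D array sampled at the video's frame rate. Subtracting the mean and applying a Hann window are my own choices, to keep the zero-frequency bin from winning and to reduce spectral leakage; the notebook may do this differently, and `dominant_frequency` is a hypothetical name.

```python
import numpy as np


def dominant_frequency(signal: np.ndarray, fps: float) -> float:
    """Frequency (Hz) of the strongest non-zero-frequency component of a 1D signal.

    signal: one foot coordinate per video frame; fps: the video's frame rate.
    """
    x = np.asarray(signal, dtype=float)
    x = x - x.mean()                    # remove the average foot position (DC offset)
    x = x * np.hanning(len(x))          # taper the clip ends to reduce spectral leakage
    spectrum = np.abs(np.fft.rfft(x))   # magnitude of each non-negative frequency bin
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fps)
    spectrum[0] = 0.0                   # never pick the zero-frequency bin
    return float(freqs[np.argmax(spectrum)])
```

Using `rfft` rather than `fft` keeps only the non-negative frequencies, which is all a real-valued position signal needs.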

results - pose tracking

These are the tracked pose outputs. The 2D keypoints are overlaid on the original video on the left, and the 3D reconstruction is displayed on the right.

alden - jogging

alden - sprinting

shepherd - jogging

shepherd - sprinting

caleb - jogging

results - analysis

From the extracted keypoints, we can plot the cyclic motions of running. The plots show the alternating motion of the left and right feet while jogging, compared with the same motion while sprinting.

Forward/backward foot motion while jogging (Alden)

Forward/backward foot motion while sprinting (Alden)
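One way to check the alternating pattern numerically, rather than by eye, is to correlate the two foot signals: feet that alternate should be strongly anti-correlated. This sketch assumes Human3.6M joint indices and that axis 0 is the runner's forward direction (both assumptions), and subtracts the pelvis so the runner's travel across the frame doesn't swamp the swing of each foot. The function names are hypothetical.

```python
import numpy as np

# ASSUMPTION: Human3.6M 17-joint order (0 = pelvis, 3 = right foot, 6 = left foot).
PELVIS, R_FOOT, L_FOOT = 0, 3, 6


def foot_forward_positions(poses: np.ndarray, forward_axis: int = 0):
    """Left and right foot positions along the forward axis, relative to the pelvis.

    poses: array of shape (frames, joints, 3). Returns two (frames,) arrays.
    """
    feet = poses[:, [L_FOOT, R_FOOT], forward_axis]    # (frames, 2)
    pelvis = poses[:, [PELVIS], forward_axis]          # (frames, 1), broadcasts
    rel = feet - pelvis
    return rel[:, 0], rel[:, 1]


def left_right_correlation(left: np.ndarray, right: np.ndarray) -> float:
    """Pearson correlation of the two foot signals; close to -1 means they alternate."""
    return float(np.corrcoef(left, right)[0, 1])
```

A correlation near -1 means the feet are roughly half a cycle apart; a value drifting toward 0 might hint that the tracker is swapping or losing one of the feet in some frames.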

We can then find the dominant frequency of these positions to calculate the runner's cadence. Here is an example for one foot's position while jogging, showing the fundamental frequency and the cadence extracted from it in steps per minute. The figure on the right shows the position of a runner's left foot; on the left, the same signal is shown in the frequency domain. The spike near 1.5 Hz corresponds to a cadence of 168 steps/min. Because one foot completes one cycle per stride, and a stride is two steps, cadence = f × 2 × 60, so 168 steps/min corresponds to f = 1.4 Hz.

Frequency and spatial representations of runner's foot position
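The conversion from frequency to cadence is simple arithmetic, but the factor of two is easy to miss: a single foot completes one cycle per stride, and each stride is two steps. This sketch (hypothetical helper names) also shows how clip length limits precision, since FFT bins are spaced `fps / n_frames` Hz apart.

```python
def cadence_spm(foot_freq_hz: float) -> float:
    """Steps per minute from the dominant frequency of ONE foot's position signal.

    One foot completes one cycle per stride, and each stride is two steps.
    """
    steps_per_foot_cycle = 2
    return foot_freq_hz * steps_per_foot_cycle * 60.0


def cadence_resolution_spm(n_frames: int, fps: float) -> float:
    """Cadence gap (steps/min) between adjacent FFT bins for a single-foot signal."""
    bin_spacing_hz = fps / n_frames
    return cadence_spm(bin_spacing_hz)
```

For example, `cadence_spm(1.4)` gives the 168 steps/min from the jogging clip, and a 4-second clip at 30 fps (120 frames) has bins 0.25 Hz apart, i.e. 30 steps/min of cadence resolution. Longer clips, or interpolating around the spectral peak, give finer estimates.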

conclusion

I was hoping to do more advanced analyses beyond cadence, but there wasn't enough time. The idea certainly has potential, but the metrics will only be as accurate as the tracking data they are computed from.

The tracking quality was excellent. It took some effort to set up the scene for peak tracking performance, but it is remarkable that the network can estimate 3D pose with such fidelity from a single monocular camera.

what i would do next

- Complete the other analyses I set out to do: foot position at ground strike, spine angle, and vertical head movement.

- Pull footage of world-class runners from YouTube and analyze their form.

- It would be amazing to run biomechanical analyses on the skeletal outputs, for example plotting the runner's center of mass over time.
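For the center-of-mass idea, a crude starting point is a weighted average of the 3D joint positions in each frame. The `weights` parameter and the `center_of_mass` name are hypothetical: equal weights are the default, which is only a stand-in, and a proper estimate would use segment mass fractions from an anthropometric model, which this sketch does not include.

```python
import numpy as np


def center_of_mass(poses: np.ndarray, weights=None) -> np.ndarray:
    """Weighted mean of joint positions per frame, shape (frames, 3).

    poses: array of shape (frames, joints, 3).
    weights: HYPOTHETICAL per-joint mass weights (any scale, normalized here).
    With None, every joint counts equally, a crude stand-in for a segment model.
    """
    n_joints = poses.shape[1]
    w = np.ones(n_joints) if weights is None else np.asarray(weights, dtype=float)
    w = w / w.sum()
    # Sum over joints j of poses[f, j, c] * w[j] for every frame f and coordinate c.
    return np.einsum("fjc,j->fc", poses, w)
```

Plotting the vertical coordinate of the result over time would give a first look at how much the runner bounces with each step.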